Revolutionizing Enterprise Analytics: Cutting Costs with Data Products

As the demand for data grows, gaining access to the best data to support data-driven decision-making is becoming a significant expense. The methods for moving and accessing data were developed before cloud computing and storage became widespread, and they now struggle to scale efficiently enough to keep up.

Legacy data access technologies were not designed for the always-on, always-connected, real-time capabilities of the cloud. Before the cloud, data had to be stored close to the application for it to be analyzed. Yet even with the real-time nature of the cloud, the fundamental way that data is moved, merged, and prepared for analysis has not changed significantly. This lack of adaptability slows analysis and misses opportunities to drive down infrastructure and data engineering costs.

In the following analysis, we will consider the costs of accessing data the traditional way using ETL (Extract, Transform, Load) and compare it to innovative approaches using federated data that harness the powerful capabilities of the cloud.

ETL Costs

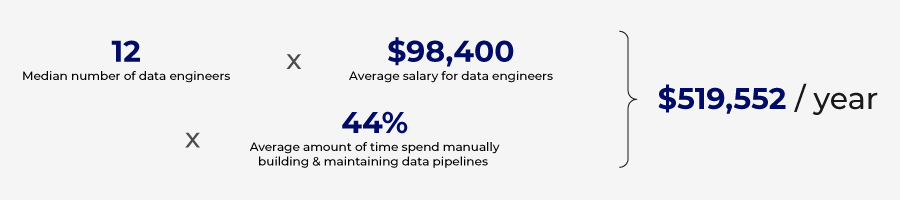

Calculating the exact cost of creating ETL pipelines is difficult, but we can estimate these costs by evaluating publicly available data and making some assumptions.

Building an ETL Pipeline from Scratch

Building an ETL pipeline requires a significant investment of time and resources. While creating an ETL pipeline from scratch involves multiple roles, a data engineer performs most of the work. This highly skilled professional manually programs the scripts to extract data, transform it for analysis, and load it into the target database. According to Glassdoor, the average salary of a data engineer in the US exceeds $150,000 per year; including benefits and expenses, the total FTE cost is about $195,000 per year, or roughly $95 an hour.

Estimates show that creating a rudimentary ETL pipeline takes one to three weeks. If we assume a mean effort of 80 hours per pipeline, that equates to $7,600 per pipeline. These pipelines also require maintenance, which might take 20% of the original effort every year, or an additional $1,520 annually. More complex ETL pipelines can take months or even years to build, costing hundreds of thousands of dollars. Building and testing a single data connector alone can take six and a half weeks.
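The per-pipeline arithmetic above can be reproduced in a few lines. This is a minimal sketch using the article's own figures ($95 an hour, 80 build hours, 20% of the build effort per year for maintenance); the function names and the three-year horizon in the example are illustrative assumptions, not part of any tool.

```python
# Figures from the text above; the multi-year horizon used below is an assumption.
ENGINEER_RATE_USD = 95   # fully loaded data engineer cost per hour
BUILD_HOURS = 80         # assumed mean effort for a rudimentary pipeline
MAINTENANCE_PCT = 20     # yearly maintenance as a percentage of the build effort


def pipeline_build_cost(hours: int = BUILD_HOURS, rate: int = ENGINEER_RATE_USD) -> int:
    """One-off labor cost to build a single pipeline."""
    return hours * rate


def pipeline_maintenance_cost(build_cost: int, pct: int = MAINTENANCE_PCT) -> int:
    """Yearly maintenance cost, expressed as a percentage of the build cost."""
    return build_cost * pct // 100


def pipeline_total_cost(years: int) -> int:
    """Build cost plus `years` of maintenance for a single pipeline."""
    build = pipeline_build_cost()
    return build + years * pipeline_maintenance_cost(build)


print(pipeline_build_cost())            # 7600
print(pipeline_maintenance_cost(7600))  # 1520
print(pipeline_total_cost(3))           # 12160
```

Under these assumptions, one rudimentary pipeline costs $12,160 over three years, before counting storage or governance.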

A survey conducted by Wakefield Research estimated that organizations spend $520,000 annually to build and maintain data pipelines.

No-Code ETL Platform

Coding and managing ETL pipelines from scratch can be expensive, but there are tools that can streamline the process and automate some of the coding requirements. Less complex ETL pipelines can be built using no-code platforms.

These platforms leverage automation and AI to reduce the time and skill set required to build ETL pipelines. With some of the tools currently available, ETL pipelines can be created in as little as three days.

While these platforms may reduce the resources needed to build pipelines by hand, they come with their own cost. These solutions are typically priced based on data volume and the number of databases connected to the platform. For larger corporations, these costs increase rapidly, and many edge use cases may not be supported by the no-code solution.

As no-code solutions significantly reduce the cost of building ETL pipelines, the number of pipelines will grow. This proliferation of ETL pipelines creates a new problem: data duplication and rising storage costs.

Storage Costs

Storage strategies come in various configurations and architectures, making precise storage estimates quite complex. However, based on publicly available data, we can quantify the costs associated with storing and managing the duplicate data created by ETL strategies.

Each time a data set is extracted from one system and loaded into another, a duplicate data set is created, which needs to be stored. The more pipelines and data requests there are, the more duplicate data sets are created, driving up storage costs.

The growth of big data and widespread data movement have led to an increase in redundant, obsolete, and trivial (ROT) data maintained in data stores. Statista reports that 8% of all data held by enterprises is original and 91% is replicated. Veritas Technologies conducted a similar study and found that 16% of data is business critical, 30% is ROT, and 54% is dark data, whose value is unknown. Both studies reach a similar conclusion: enterprises maintain an overwhelming amount of useless data and waste significant resources storing it.

Google Cloud charges $0.02 per GB per month for cloud storage, which works out to $20 per terabyte and $20,000 per petabyte each month. According to Veritas Technologies, the average organization spends $650,000 annually to store non-critical data.
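The storage figures above can be combined into a quick estimator. This is a minimal sketch assuming the $0.02 per GB-month price quoted in the text (real prices vary by storage class and region) and decimal units (1 TB = 1,000 GB); the 10 TB data set copied by five pipelines is a hypothetical example, and the 30% ROT share is the Veritas figure cited above.

```python
# Assumed inputs: the $0.02 per GB-month price quoted above (actual prices vary
# by storage class and region) and decimal units (1 TB = 1,000 GB).
PRICE_CENTS_PER_GB_MONTH = 2
GB_PER_TB = 1_000


def monthly_storage_cost_usd(terabytes: float) -> float:
    """Monthly cost of storing `terabytes` of data at the assumed price."""
    return terabytes * GB_PER_TB * PRICE_CENTS_PER_GB_MONTH / 100


def duplicate_copy_cost_usd(dataset_tb: float, copies: int, months: int = 12) -> float:
    """Cost of keeping `copies` extra copies of a data set (one per ETL load) for `months`."""
    return monthly_storage_cost_usd(dataset_tb * copies) * months


def rot_monthly_cost_usd(estate_tb: float, rot_pct: float = 30) -> float:
    """Monthly cost of the ROT share of a storage estate (30% per the Veritas figure)."""
    return monthly_storage_cost_usd(estate_tb * rot_pct / 100)


print(monthly_storage_cost_usd(1))      # 20.0 (1 TB)
print(monthly_storage_cost_usd(1_000))  # 20000.0 (1 PB)
print(duplicate_copy_cost_usd(10, 5))   # 12000.0 (hypothetical: 10 TB, 5 copies, 1 year)
print(rot_monthly_cost_usd(1_000))      # 6000.0 (ROT share of a 1 PB estate)
```

Because every ETL load adds another copy, the duplicate cost scales linearly with the number of pipelines that touch the same data set.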

Multiple factors are driving the growth of ROT, and the maintenance of data silos is a significant one. When every business function maintains its own database to support its operations, common data sets are repeated across many of these databases, wasting storage resources.

Bad Data Caused by ROT

Cost of Governance

Storing ROT not only has storage cost implications but also increases risk. Multiple copies of the same data set lead to conflicting sources of truth, and inconsistent data formats lead to confusion.

To avoid poor data quality, effective data governance policies must be implemented. In 2021, Gartner estimated that poor data quality costs organizations an average of $12.9 million annually.

Traditional manual data governance processes are no longer sufficient, and investments in automated data governance tools and strategies are required. Manually vetting reports and setting up custom rules is time-consuming, and implementing these policies, rules, and oversight independently for each ETL pipeline requires careful attention and time investment.

Investing in preventing bad data is money well spent. According to the widely cited 1-10-100 rule, if it costs $1 to prevent bad data, it costs $10 to fix it later and $100 if it goes uncorrected and causes a failure. The Data Warehousing Institute estimates that bad data costs companies $600 billion annually.

Redundant data also poses privacy risks. Much of the data replicated across data silos includes personally identifiable information (PII), and every additional copy increases the probability of a data breach.

Challenges Will Only Grow

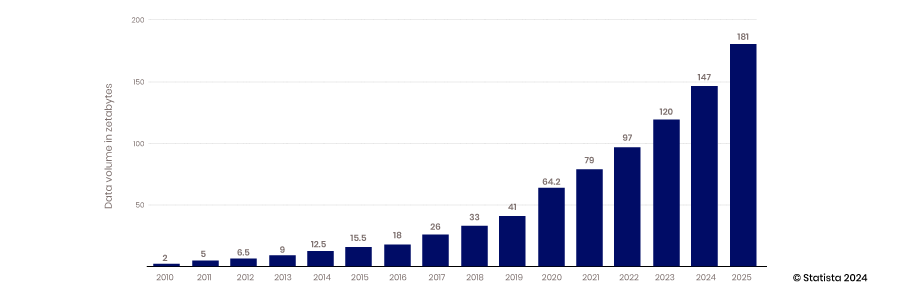

The continued exponential growth of data collection and storage will only exacerbate the problems of duplicated data created by inefficient data integration and management strategies. Statista estimates that by 2025, 181 zettabytes of data will be created, consumed, copied, and captured.

Soft Costs

Because developing ETL pipelines takes time, whether they are built from scratch or with no-code platforms, data access is not as agile as it could be. Opportunities are lost when analysts and decision-makers cannot access quality data quickly. These opportunity costs are difficult to quantify but very real. Given the number of decisions made across an organization, even a marginal reduction in time to insight is significant. By optimizing decision-making across an organization, the opportunity cost savings compound, as good decisions lead to even better decisions and options.

New Paradigm

A new data access paradigm is emerging that will reduce the costs of data access and management. This approach moves away from ETL and focuses on centralized governance, security, and access built around data products. (To delve deeper into the new data paradigm, be sure to read this blog post.)

This new approach provides access to data without moving or replicating it. It also relies on reusable data products that eliminate the need to build an ETL pipeline for every use case. This shift can reduce the time needed to provision data for self-service by 40-50%, saving about $4,100 per pipeline, or $225,000 for a typical organization that invests in ETL pipelines.

Because data no longer needs to be moved from one database to another through an ETL process, no redundant copies are created, and storage and preparation costs can be reduced by 30-40%.

Reducing Costs

This new paradigm uses data products to deliver data to analytics platforms, and data products are cheaper to create than data pipelines: they take less time to build and require less expensive skill sets. It takes about 24 hours to create a data product, 70% less time than a rudimentary ETL pipeline, and the work can be done by a data analyst instead of a data engineer. Salaries for data analysts in the US average $77,000, for a total FTE cost of $100,000. That equals about $50 an hour, vs. $95 for a data engineer. Based on these estimates, creating one data product costs $1,200, compared to $7,600 for a single simple data pipeline.
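The comparison above can be checked with a short calculation. This minimal sketch uses only the article's estimates (80 hours at $95 for an ETL pipeline, 24 hours at $50 for a data product); the helper names are illustrative.

```python
# Effort and hourly figures from the text above (rounded estimates, not measurements).
ETL_BUILD_HOURS, DATA_ENGINEER_RATE = 80, 95
DATA_PRODUCT_HOURS, DATA_ANALYST_RATE = 24, 50


def build_cost(hours: int, hourly_rate: int) -> int:
    """Labor cost of a one-off build: hours of effort times a fully loaded hourly rate."""
    return hours * hourly_rate


def pct_reduction(before: int, after: int) -> int:
    """Percentage reduction from `before` to `after`, rounded down to a whole percent."""
    return 100 * (before - after) // before


etl = build_cost(ETL_BUILD_HOURS, DATA_ENGINEER_RATE)             # 7600
data_product = build_cost(DATA_PRODUCT_HOURS, DATA_ANALYST_RATE)  # 1200
print(pct_reduction(ETL_BUILD_HOURS, DATA_PRODUCT_HOURS))  # 70 (effort)
print(pct_reduction(etl, data_product))                    # 84 (cost)
```

The cost reduction (about 84%) is larger than the effort reduction (70%) because the two savings multiply: fewer hours, each at a lower hourly rate.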

The data product approach reduces the demand for data storage, but accessing data in place in real time increases network and database processing costs. This is a tradeoff, but network costs are incurred only when valuable data is delivered for analysis, whereas storage costs are incurred for useless and unused data as well.

Advances in data governance automation also drive significant cost savings in today’s data management landscape. Automated governance includes automating data classification, access control, metadata management, and data lineage tracking. Data governance solutions enable organizations to use algorithms and workflows to automatically apply data policies, monitor data usage, and resolve data quality problems before they spread. Informatica estimates that organizations can save $475,000 to $712,000 using automated governance solutions.

Typically, these solutions are stand-alone packages bolted onto your data pipelines, costing about $20,000 per year for 25 users. The data product platform approach puts governance at the center of the process, and its cost is included in the cost of the platform.
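To make "governance at the center" concrete, here is a minimal sketch of one way it can work: a single, centrally defined policy maps each column's classification tag to the roles allowed to see raw values, and every read of every data product passes through it, instead of each ETL pipeline re-implementing its own masking rules. The policy table, role names, tags, and the `DataProduct` class are all hypothetical and do not represent any particular platform's API.

```python
from dataclasses import dataclass

# Hypothetical central policy: for each classification tag, the roles that may
# see raw values. It is defined once and applied to every data product.
RAW_ACCESS = {
    "public": {"analyst", "steward"},
    "pii": {"steward"},
}
MASK = "***"


@dataclass
class DataProduct:
    """Hypothetical data product: rows plus a column -> classification tag map."""
    name: str
    classification: dict
    rows: list


def read(product: DataProduct, role: str) -> list:
    """Return the product's rows with the central policy applied for `role`."""
    governed_rows = []
    for row in product.rows:
        governed = {}
        for column, value in row.items():
            # Columns without a known tag get no raw access, i.e. they fail closed.
            allowed_roles = RAW_ACCESS.get(product.classification.get(column), set())
            governed[column] = value if role in allowed_roles else MASK
        governed_rows.append(governed)
    return governed_rows


customers = DataProduct(
    name="customers",
    classification={"id": "public", "email": "pii", "region": "public"},
    rows=[{"id": 1, "email": "ana@example.com", "region": "EU", "notes": "vip"}],
)
print(read(customers, "analyst"))  # [{'id': 1, 'email': '***', 'region': 'EU', 'notes': '***'}]
print(read(customers, "steward"))
```

Unclassified columns such as `notes` are masked for everyone by default, so a column nobody has tagged yet fails closed rather than exposing PII.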

Economies Enabled by Data Products

Typically, ETL pipelines are built for one specific use case, and their benefits must outweigh the cost of building them, so their value is relatively well understood and static. The adaptability of data products makes their value more scalable. When data products are built on a standard platform, multiple data products can easily be combined to create new ones. Also, a data product built for one use case can easily be adapted to add value in a separate application.

This adaptability allows data products to increase in value as they address new use cases that the original developer may not have envisioned. As the value increases while the cost to create the data product stays fixed, the return on that investment grows. This is another way that data products help drive down the cost of delivering new insights and value.
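The reuse argument can be expressed as a simple return-on-investment calculation: the build cost is paid once, while value accrues with each additional use case. This sketch assumes the article's $1,200 data product build cost and a purely hypothetical $1,000 of value per use case, and it ignores maintenance and adaptation costs for simplicity; the figure and the function name are illustrative only.

```python
# Build cost from the estimate earlier in this article; value per use case is a
# hypothetical placeholder, and maintenance/adaptation costs are ignored.
DATA_PRODUCT_COST = 1_200
VALUE_PER_USE_CASE = 1_000  # hypothetical


def roi(build_cost: float, value_per_use_case: float, use_cases: int) -> float:
    """Simple return on investment: (total value delivered - build cost) / build cost."""
    return (value_per_use_case * use_cases - build_cost) / build_cost


for n in (1, 2, 3, 5):
    print(f"{n} use case(s): ROI = {roi(DATA_PRODUCT_COST, VALUE_PER_USE_CASE, n):.0%}")
```

Under these assumptions, a data product serving a single use case does not pay for itself, but the return climbs steadily with each new use case, which is the dynamic described in the paragraph above.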

A data product strategy reduces costs in numerous ways, enabling better decision-making and AI training. But while the data product strategy helps reduce costs, the real benefit is increased agility and competitiveness. This benefit compounds over time and is hard to quantify, but it is very real.
